An AI agent, also known as an autonomous agent or intelligent agent, is a generative AI program capable of interacting with its environment and accomplishing predetermined tasks autonomously. These artificial intelligence agents use the reasoning capabilities of large language models (LLMs) to autonomously develop plans for achieving a given task. The AI agents are further empowered to access real-world tools (e.g., a web browser) to execute their plans.

So how are these agents different from adjacent technologies like AI copilots? Though some have agentic AI built into them, copilots aren’t intended to function autonomously. Instead, they augment employees’ abilities through direct real-time interaction. Think of a customer service copilot retrieving information and suggesting responses to help a contact center agent resolve a complex query, or a field service copilot helping a technician repair an engine.

Back to building AI agents, let’s say we want to task one with ordering a pizza. Our instruction to the agent may look like:

The AI agent will respond with a comprehensive plan to execute on the task (truncated for conciseness):

By making sure the AI agent has the right tools, we empower it to complete the task on its own. In this tutorial, we’ll detail how to build your own autonomous plan-and-execute agent using Python, OpenAI, and Beautiful Soup.

Set Up the AI Agent’s Tools and Memory

We’re going to build a plan-and-execute agent that’s capable of accepting instructions and navigating within the context of a web browser using links on the page. To accomplish this, we need to equip our agent with some capabilities:

- Tools: These are functions that the LLM has access to and can choose from. In this project, we’ll give it functions to open a Chrome browser, navigate to a given page, and parse out the links on that page.

- Memory: This is a data structure that allows the LLM to remember things that have been done when planning future tasks. This includes bread crumbs for itself as well as what tools have been called. Memory can be kept short-term in the agent or long-term to track the overall goal progress.

With these core components in place, we can now set up our plan-and-execute loop.

Create the Plan-and-Execute Loop

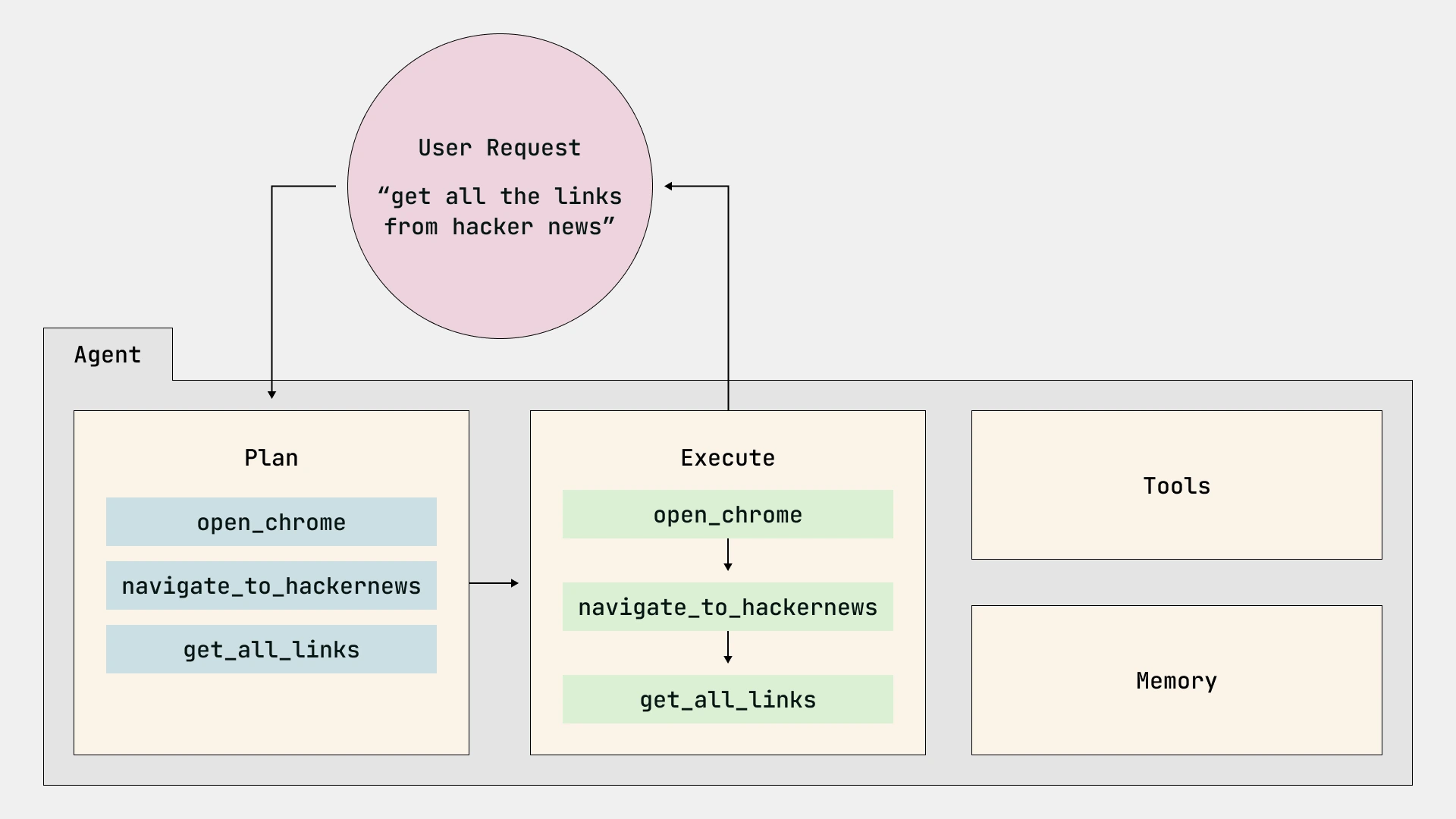

Agents work by analyzing their goals, creating steps that will reasonably accomplish that goal, and executing them using tools.

The heart of this process is the plan-and-execute loop. Our example is built around the following plan-and-execute loop:

1. First, we provide the agent with an instruction through the terminal. In this case, “Get all the links from Hacker News.”

2. Next, the agent creates a plan to accomplish the task considering its instructions and the tools that it has at its disposal. Given the tools we outlined, it responds with the following steps:

- open_chrome

- navigate_to_hackernews

- get_all_links

3. Last, with a completed plan, the agent moves on to the execute phase, calling the functions in order. When a function is called, the memory updates with the tool that was called and the related metadata, including the task, parameters, and result of the call.

Enhance the Agent’s Memory, Prompts, and Tools

To give our tools greater functionality, we'll import several libraries. We chose these tools to showcase different ways agents can interact with a system:

- `subprocess` allows us to open system applications.

- `requests` and `BeautifulSoup` allow us to get links from a URL.

- `OpenAI` allows us to make LLM calls.

Setup:

Memory

For our AI agent’s plans to be effective, we need to know what the agent has done and still needs to do. This step is often abstracted in frameworks, making it important to call out. Without updating the agent’s “state,” the agent won't know what to call next. Think of this as similar to conversation history in ChatGPT.

Let’s create an `Agent` class and initialize it with variables that we can use for completing our task. We will need to know what tasks have been given and the LLM’s responses to those tasks. We can save those as `memory_tasks` and `memory_responses`. We will also want to store our planned actions along with any urls and links we might come across.
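As a rough illustration of the class described above, here is a minimal sketch. Only `Agent`, `memory_tasks`, and `memory_responses` come from the text; the remaining field names (`planned_actions`, `url`, `links`) and the `remember` and `history` helpers are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Agent:
    """Holds the agent's working memory for a single run (illustrative sketch)."""

    # Tasks handed to the LLM, in the order they were issued.
    memory_tasks: list = field(default_factory=list)
    # Raw LLM responses, index-aligned with memory_tasks.
    memory_responses: list = field(default_factory=list)
    # Steps produced by the planning call (assumed field name).
    planned_actions: list = field(default_factory=list)
    # The page the agent is currently on; None until it navigates (assumed).
    url: Optional[str] = None
    # Links collected from visited pages (assumed field name).
    links: list = field(default_factory=list)

    def remember(self, task: str, response: str) -> None:
        """Record one task/response pair so later prompts can include it."""
        self.memory_tasks.append(task)
        self.memory_responses.append(response)

    def history(self) -> str:
        """Render memory as plain text suitable for pasting into a prompt."""
        pairs = zip(self.memory_tasks, self.memory_responses)
        return "\n".join(f"task: {t} -> response: {r}" for t, r in pairs)
```

Using `default_factory` gives every `Agent` instance its own lists, so two agents never share memory by accident.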

Plan-and-execute prompts

To make and execute a plan, we need to know which tools we have and which steps have already been taken.

If we want to navigate to a website and get links, we should describe tools for opening Chrome, navigating to a website, and getting links. We can structure the prompt clearly around a role, a task, instructions, the tools available, and the expected output format. With this, we are asking the LLM to plan the steps needed to accomplish our task with the given tools.

The `update_system_prompt` is included to show specifically how “memory” is added during the execution of this workflow. The only major differences between the planning and execution prompts are the addition of our memory and the response format of the prompt outputs.

When completing a task, the LLM will look at the previous memory of tasks completed and choose a tool to use for the given task. This will return a tool name along with any variables needed to complete the task in JSON format.
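The two prompts might be structured along these lines. This is a sketch under assumptions: the prompt wording, the `TOOLS` descriptions, the helper `format_tools`, and the function signatures are all invented for illustration. Only the role/task/instructions/tools/output structure and the name `update_system_prompt` come from the text.

```python
# Tool names mirror the tools described in this post; the descriptions
# are placeholder wording written for this sketch.
TOOLS = {
    "open_chrome": "Open a Chrome browser window.",
    "navigate_to_hackernews": "Navigate the browser to Hacker News.",
    "get_https_links": "Return all https links on the current page.",
}


def format_tools(tools):
    """Render the tool registry as a bulleted block for the prompt."""
    return "\n".join(f"- {name}: {desc}" for name, desc in tools.items())


def planning_system_prompt(tools):
    """Planning prompt: asks for an ordered list of steps, no memory needed."""
    return (
        "Role: You are a planning assistant for a browser agent.\n"
        "Task: Break the user's instruction into ordered steps.\n"
        "Instructions: Use only the tools listed below, one per step.\n"
        f"Tools:\n{format_tools(tools)}\n"
        "Output: one tool name per line, in execution order."
    )


def update_system_prompt(tools, memory):
    """Execution prompt: same structure, plus memory and a JSON output format."""
    history = "\n".join(memory) if memory else "(nothing yet)"
    return (
        "Role: You are an execution assistant for a browser agent.\n"
        "Task: Choose the single tool that completes the current task.\n"
        f"Tools:\n{format_tools(tools)}\n"
        f"Memory of completed steps:\n{history}\n"
        'Output: JSON of the form {"tool": "<name>", "parameters": {}}.'
    )
```

Keeping the two builders side by side makes the difference visible: the execution prompt adds the memory block and swaps the output format for JSON.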

The `openai_call` function lets us call OpenAI and request the different `format_responses`, which showcases the difference between planning and execution. The JSON format is important for execution because we use the tool name in the response to decide which function to actually run.
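One possible shape for such a helper is sketched below. The signature, the `as_json` flag, and the model name are assumptions for this example, not the original code. The client is passed in as an argument so the function can be exercised with a stub; with the official SDK it would be an `OpenAI()` instance.

```python
import json


def openai_call(client, system_prompt, user_message, as_json=False,
                model="gpt-4o-mini"):
    """Send one chat request; parse the reply as JSON when as_json is True.

    The model name is a placeholder assumption; use whichever model you have
    access to.
    """
    kwargs = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }
    if as_json:
        # JSON mode: the prompt itself should also ask for JSON output.
        kwargs["response_format"] = {"type": "json_object"}
    response = client.chat.completions.create(**kwargs)
    content = response.choices[0].message.content
    return json.loads(content) if as_json else content


# Typical wiring with the official SDK (requires the openai package and an
# OPENAI_API_KEY environment variable):
#   from openai import OpenAI
#   client = OpenAI()
#   plan_text = openai_call(client, "You are a planner.", "Get all the links")
```

Returning a parsed dictionary in JSON mode means the caller can read the chosen tool name directly instead of parsing free text.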

Tools

Tools are functions that an agent has access to. Tools vary widely depending on the tasks the agent aims to accomplish.

In the code below, there are three specific tools that showcase how we can open a browser, navigate to a website, and get links. The way that these tools will be called is shown below when we put it all together. For now, think of this as a function an LLM can choose to call or not based on the current task of the plan.

The `open_chrome()` tool uses Python’s `subprocess` module to open a Chrome instance that we can then navigate.

The `navigate_to_hackernews()` tool is hard-coded for this demo to showcase the idea; it should be expanded to accept any URL.

The `get_https_links()` tool uses the Beautiful Soup library. It grabs the URL of the page we are on and gets all of the links on it.
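To make the link-gathering step concrete without extra dependencies, the sketch below uses the standard library’s `html.parser` as a stand-in for Beautiful Soup. It is not the original tool: the names `extract_https_links` and `_LinkCollector` are hypothetical, and the function takes an HTML string rather than fetching a page. With Beautiful Soup, the equivalent step would typically be `soup.find_all("a", href=True)`.

```python
from html.parser import HTMLParser


class _LinkCollector(HTMLParser):
    """Collects href values from <a> tags as the parser walks the HTML."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_https_links(html):
    """Return every https:// link found in anchor tags, in document order."""
    collector = _LinkCollector()
    collector.feed(html)
    return [href for href in collector.hrefs if href.startswith("https://")]
```

Separating fetching from parsing keeps the parsing logic easy to check against a small HTML snippet before pointing it at a live page.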

Bring All Your Work Together

Here, we create a function to plan and then execute that same plan.

To do this, we need to:

- Send the planning system prompt to the LLM to create a plan and save those tasks as a list.

- For every task in that list:

  - Keep track of the task given to the agent.

  - Pull in the task prompt with the history.

  - Call the LLM to choose a tool based on the given task and return as JSON.

  - Update our memory with the LLM response.

  - Match the tool called by the LLM to one of the tools available.

  - Run the function called.

  - Start the next task.

Here’s what this looks like in our code below:

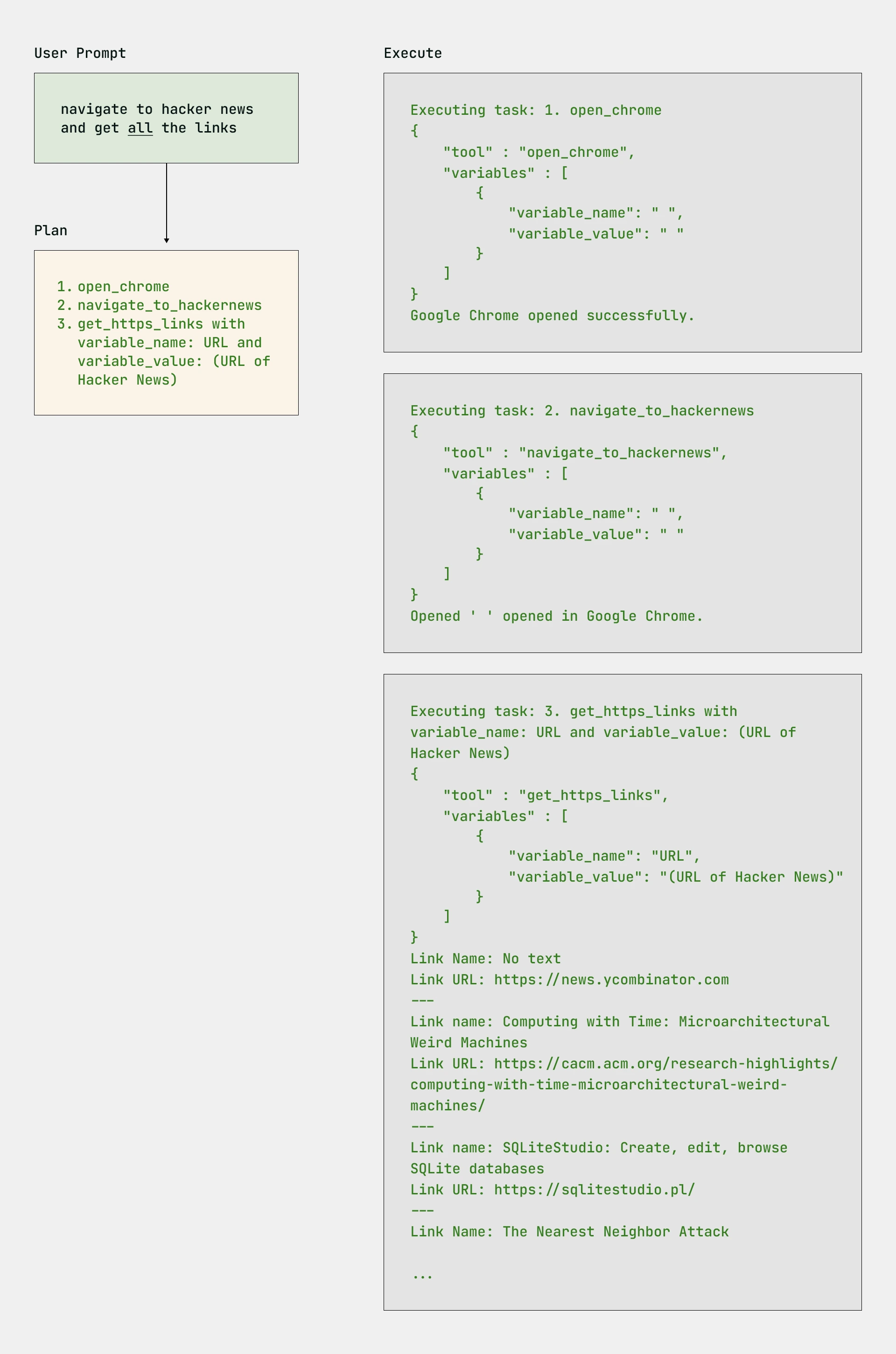
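As a text companion to the steps listed above, here is a minimal sketch of the loop. It is not the code from the image: `plan_and_execute`, `fake_llm`, and `FAKE_TOOLS` are hypothetical names, and the LLM is replaced by a stub so the control flow can be run offline.

```python
import json


def plan_and_execute(instruction, llm, tools):
    """Plan once, then run each planned task through a tool chosen by the LLM.

    llm(prompt, as_json) is a hypothetical callable standing in for the
    OpenAI call; tools maps tool names to Python functions.
    """
    memory = []  # one record per executed task
    plan = llm(f"Plan steps for: {instruction}", False)
    # Assumes the plan comes back as one step per line.
    tasks = [line.strip() for line in plan.splitlines() if line.strip()]
    for task in tasks:
        history = json.dumps(memory)
        choice = llm(f"Task: {task}\nMemory: {history}", True)
        tool = tools.get(choice["tool"])
        if tool is None:
            result = f"unknown tool: {choice['tool']}"
        else:
            result = tool(**choice.get("parameters", {}))
        memory.append({"task": task, "tool": choice["tool"], "result": result})
    return memory


def fake_llm(prompt, as_json):
    """Stub LLM: returns a fixed plan, then echoes each task as the tool name."""
    if not as_json:
        return "open_chrome\nnavigate_to_hackernews\nget_https_links"
    task = prompt.splitlines()[0][len("Task: "):]
    return {"tool": task, "parameters": {}}


# Stub tools that return canned values instead of touching a browser.
FAKE_TOOLS = {
    "open_chrome": lambda: "opened",
    "navigate_to_hackernews": lambda: "navigated",
    "get_https_links": lambda: ["https://example.com"],
}
```

Calling `plan_and_execute("Get all the links from Hacker News.", fake_llm, FAKE_TOOLS)` returns the memory list, with one record per task holding the task, the tool chosen, and its result.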

With our agent in place, we can now run a request and see how the agent responds.

“Get all the links from Hacker News.”

The plan the agent generated:

The outputs of each of the tasks being run:

Pitfalls When Building Plan-and-Execute Agents

If we start every agent call by making a plan, we don’t always have the flexibility to handle problems that arise. How we handle failures, and which tools the agent has to mitigate them, is very important. In our plan-and-execute approach, the plan is hard to update unless each step is wrapped in a try/except block and we revise the plan when a step fails.
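One way to picture that failure handling is the sketch below, which wraps each step in try/except and asks for a revised plan when a step raises. The function name, the `run_task` and `replan` callables, and the `max_replans` cap are assumptions for illustration; in a real agent, `replan` would be another LLM call.

```python
def execute_with_replanning(tasks, run_task, replan, max_replans=2):
    """Run tasks in order; on an exception, ask for a new plan for what remains.

    run_task(task) executes one step. replan(failed_task, error, remaining)
    returns the new list of pending tasks. Both are supplied by the caller.
    """
    completed = []
    pending = list(tasks)
    replans = 0
    while pending:
        task = pending.pop(0)
        try:
            completed.append((task, run_task(task)))
        except Exception as error:
            if replans >= max_replans:
                raise  # give up rather than replanning forever
            replans += 1
            pending = replan(task, str(error), pending)
    return completed
```

The cap on replans matters: without it, a step that always fails would keep the agent replanning indefinitely.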

More problems that could arise include:

- One call for planning, and multiple calls for execution. While we presented things this way to illustrate the steps, using a single prompt to both plan the next step and execute allows for a more dynamic agent.

- This is a linear plan created at the onset, so if we run into any problems, we aren’t able to continue effectively. This is where we can have an agent reflect on the outcome and choose the next best approach.

- The AI agent will fall apart if it has to interact with any graphical user interfaces (GUIs). This is where multiple agents can come in or custom tools to allow for navigation of a specific application.

- As we add more tools, the prompts can grow unwieldy. Once the tool list gets large, it’s best to store tool definitions outside the prompt and scope each agent to specific tasks.

- We are responsible for creating all the tools. Emerging standards look to establish best practices for interactions with service providers.

- The memory is all being saved in the class itself now. We should move this to a central location to persist this data.
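For the last point, a very small step toward persistence could look like the following. It writes memory to a JSON file, which is only a stand-in for whatever central store you would actually use; `save_memory` and `load_memory` are hypothetical helper names.

```python
import json
from pathlib import Path


def save_memory(path, memory):
    """Write the agent's memory (a JSON-serializable list) to disk."""
    Path(path).write_text(json.dumps(memory, indent=2), encoding="utf-8")


def load_memory(path):
    """Read memory back; a missing file means the agent has no history yet."""
    file = Path(path)
    if not file.exists():
        return []
    return json.loads(file.read_text(encoding="utf-8"))
```

Loading at the start of a run and saving after each task lets the agent pick up where it left off if the process restarts.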

Fortunately, most of these issues can be solved with more advanced techniques and concepts.

Advanced Techniques and Concepts for Building AI Agents

With our understanding of plan-and-execute agents, we can build on what we know to create agents with more advanced capabilities. These include utilizing agentic frameworks and creating specific architectures.

While we covered the basics of building a plan-and-execute agent in this blog, you can push its abilities even further by incorporating advanced techniques such as:

- ReAct (Reason and Act): This merges the planning and execution prompts into one, allowing the prompt to think one step at a time.

- ADaPT (As-Needed Decomposition and Planning for complex Tasks): Perhaps best thought of as an extension of ReAct, ADaPT plans step by step, but also allows the agent to recursively break down problems when they arise or when steps are too large.

- Reflexion: This allows an agent to know how well it did or if it needs to try again after a task has been completed.
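To contrast with the plan-then-execute flow, here is a simplified sketch in the spirit of ReAct: one decision per step, informed by what has been observed so far. It is an illustration under assumptions rather than the published technique. `react_loop` and `decide` are hypothetical names, and `decide` stands in for a single LLM call.

```python
def react_loop(goal, decide, tools, max_steps=5):
    """Alternate between choosing one action and observing its result.

    decide(goal, observations) is a hypothetical stand-in for one LLM call
    that returns either {"tool": name} or {"finish": answer}.
    """
    observations = []
    for _ in range(max_steps):  # bounded, so the loop cannot run forever
        action = decide(goal, observations)
        if "finish" in action:
            return action["finish"], observations
        result = tools[action["tool"]]()
        observations.append((action["tool"], result))
    # Step budget exhausted without a final answer.
    return None, observations
```

Because each decision sees the latest observations, the agent can change course mid-task instead of following a plan fixed at the outset.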

As for advanced concepts that you could incorporate into existing agents or alongside other techniques, you could explore options such as:

- Recursion: Agents call their own functions to keep tasks going until the problem is solved. Think of this as `while True`, and be careful about infinite loops. This pattern gives the agent a lot of autonomy.

- Multiple agents: These are perfect if you require agents to talk to one another or orchestrate a more complex task. Not every agent needs access to all tools or systems.

- Data standardization: Standards such as Anthropic’s Model Context Protocol (MCP) are emerging to standardize how AI integrates with tools and data services. Efforts to establish best practices for how AI agents interact with data services are becoming increasingly important.

- Frameworks: Agentic frameworks allow for the creation of agents, but often have their own paradigms that build on the ideas presented above. AutoGen, LangGraph, and LlamaIndex are a few examples of agentic frameworks.

With advanced techniques and concepts, you can build more robust agents capable of things like comparing different scenarios when making decisions, or helping customer service chatbots handle more complex queries.

Start Building AI Agents Tailored to Your Business Needs

In this post, we looked at the anatomy of an AI agent through a practical plan-and-execute example, showcasing how agents use planning, execution, tools, and memory to accomplish tasks. Our basic implementation highlighted key considerations like tool selection and pitfalls, while also introducing more advanced concepts such as ReAct and multi-agent systems.

Mastering these concepts will prepare you to build agents capable of handling dynamic and generative tasks, including for high-value use cases like:

- Process automation where agents enhance workflows by navigating internal systems, aggregating data from multiple sources, and generating reports.

- Product development where AI-powered features can adapt to user requests and interact with various APIs and services.

WillowTree can accelerate your efforts by helping you implement the right frameworks and standards to develop and deploy AI agents. Learn more about our Data & AI consulting services.